What is Heartbleed?

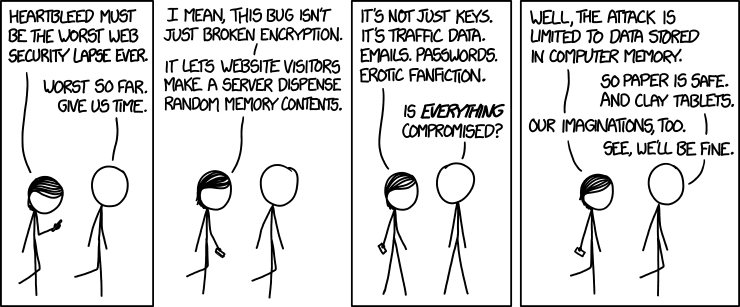

The Heartbleed Bug defines this bug as “[A] serious vulnerability in the popular OpenSSL cryptographic software library. This weakness allows stealing the information protected, under normal conditions, by the SSL/TLS encryption used to secure the Internet.” Many prominent security experts have called this bug one of the biggest security issues on the internet to date. It basically allows anyone on the internet to read a chunk of the memory that OpenSSL uses to keep your stuff protected. This means your usernames, passwords, content, and, even worse, the key used to encrypt all this information can be exposed by this attack. If attackers get that key, they can read anything that OpenSSL tries to “hide”. To make matters worse, OpenSSL is one of the most widely used encryption tools on the internet.
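To make the "read a chunk of memory" part more concrete, here is a toy sketch in Python. It is not OpenSSL's code, and every name in it (`build_memory`, `heartbeat_vulnerable`, `heartbeat_checked`, the sample secret) is made up for illustration. It only models the general idea: a heartbeat request carries a payload plus a length the sender claims for it, and a server that trusts the claimed length can end up echoing back bytes that sit next to the payload in memory.

```python
# Toy simulation of a heartbeat over-read. All names and data are hypothetical;
# real process memory is modeled here as a single bytearray.

def build_memory(payload: bytes, secrets: bytes) -> bytearray:
    """Place the request payload right next to unrelated secret data."""
    return bytearray(payload + secrets)


def heartbeat_vulnerable(memory: bytearray, start: int, claimed_len: int) -> bytes:
    """Echo back `claimed_len` bytes, trusting the sender's claimed length."""
    return bytes(memory[start:start + claimed_len])


def heartbeat_checked(memory: bytearray, start: int,
                      actual_len: int, claimed_len: int) -> bytes:
    """Echo the payload only if the claimed length fits the real payload."""
    if claimed_len > actual_len:
        return b""  # discard the malformed request instead of answering it
    return bytes(memory[start:start + claimed_len])


payload = b"bird"
memory = build_memory(payload, b"user=alice;password=hunter2")

# The sender sent 4 bytes but claims to have sent 20.
leak = heartbeat_vulnerable(memory, 0, 20)
safe = heartbeat_checked(memory, 0, len(payload), 20)

print(leak)  # starts with the payload, followed by bytes that were never sent
print(safe)  # empty: the request is rejected
```

The only difference between the two functions is the length comparison, which is the kind of validation step the second version assumes a careful server would perform before replying.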

All this sounds like the kind of thing people usually find out about when some hacker breaks into a big server. However, this flaw had been around since 2012, and nobody publicly knew about it until about two weeks ago, when it was independently found by Neel Mehta, a Google Security engineer, and by a group of security engineers at Codenomicon.

What did they do?

As far as I know, they reported it to NCSC-FI and the OpenSSL team and then publicized it to some extent. This pushed big server operators such as Facebook, Yahoo, and Microsoft to fix the issue, because now everybody knew about it. Five days after the discovery of the bug, this list was released, covering the top 1000 sites and whether they were vulnerable or not. 48 of these websites were still vulnerable at that point. Among them are some big names such as Yahoo!, Stack Overflow, and Flickr.

Ethical issues:

The main question we can ask here is: who is to blame? One answer could be that the people developing OpenSSL are to blame. PCMag writes about Robin Seggelmann, a programmer who uploaded the code with the heartbeat request feature on Dec 31, 2011. Seggelmann says, “I am responsible for the error, because I wrote the code and missed the necessary validation by an oversight. Unfortunately, this mistake also slipped through the review process and therefore made its way into the released version.”

Another question is: who was taking advantage of this bug, given that it was out there for about two years?

As Bruce Schneier mentions in his blog post, “[a]t this point of time, the probability is close to one that every target has had its private keys extracted by multiple intelligence agencies.” Supporting Schneier, the Electronic Frontier Foundation (EFF) mentions two stories in this article about how the evidence shows the possibility that an intelligence agency could have been taking advantage of this bug all along.

I think that if this is true, it is completely unethical. It is like a company finding a way to access its employees’ data and, instead of fixing the issue, taking advantage of its own employees. What do you think?
